AI and the paperclip problem

By A Mystery Man Writer

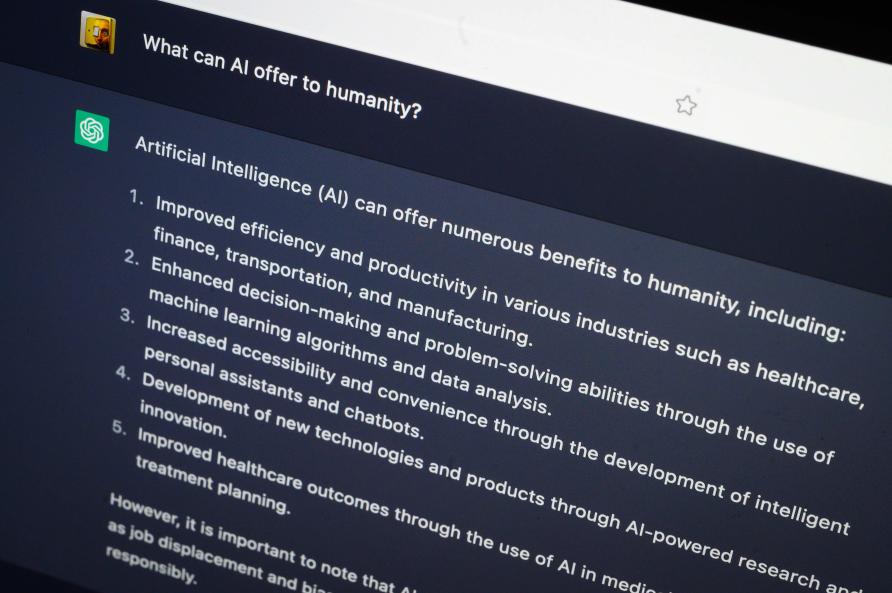

Philosophers have speculated that an AI given a task such as making paperclips might cause an apocalypse by learning to divert ever-increasing resources to that task, and then learning to resist our attempts to turn it off. This column argues that, to do so, the paperclip-making AI would need to create another AI that could acquire power both over humans and over itself, and so it would self-regulate to prevent this outcome. Humans who create AIs with the goal of acquiring power may be a greater existential threat.

