How to Fine-tune Mixtral 8x7B with Open-source Ludwig - Predibase

By A Mystery Man Writer

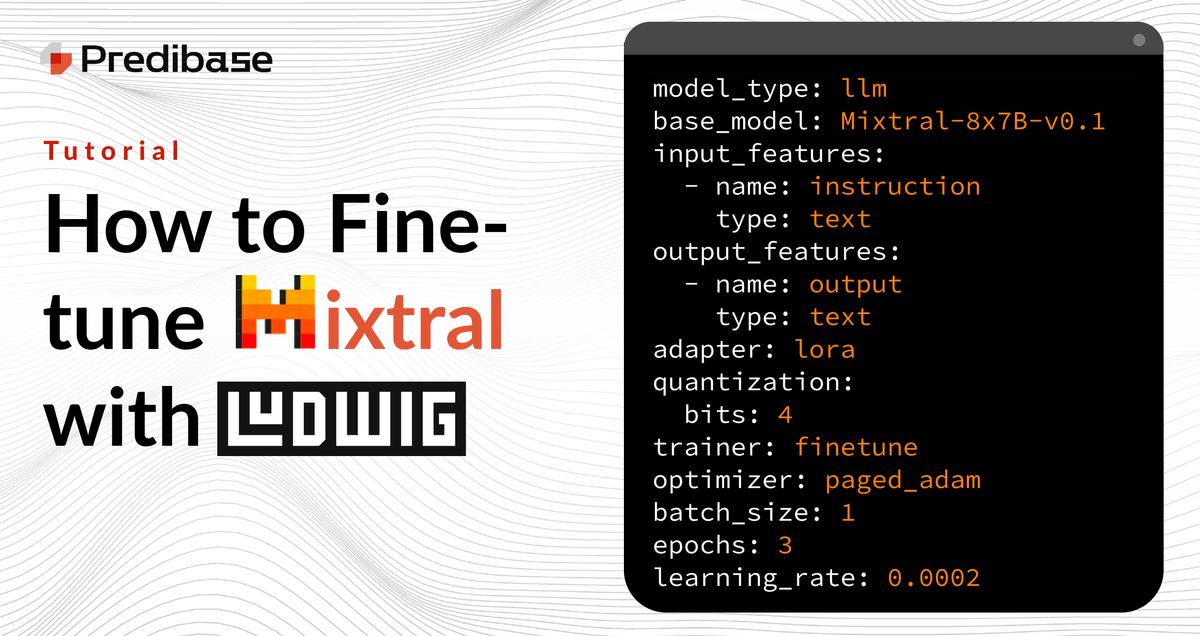

Learn how to reliably and efficiently fine-tune Mixtral 8x7B on commodity hardware in just a few lines of code with Ludwig, the open-source framework for building custom LLMs. This short tutorial provides code snippets to help get you started.
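What follows is a minimal sketch of what such a snippet might look like. It assumes Ludwig's declarative LLM configuration schema (v0.8 or later) and its Python API (`ludwig.api.LudwigModel`). The dataset column names `instruction` and `output`, the prompt template, the helper `train`, and all trainer values are illustrative assumptions, not the tutorial's own settings. The config pairs 4-bit quantization with a LoRA adapter, which is a common way to make a model of this size trainable on commodity GPUs.

```python
"""Sketch of a Ludwig-style config for LoRA fine-tuning Mixtral 8x7B.

Assumptions (not from the original tutorial): the column names
"instruction" and "output", the prompt template, and all trainer values.
"""

# Declarative config following Ludwig's LLM fine-tuning schema (v0.8+).
config = {
    "model_type": "llm",
    "base_model": "mistralai/Mixtral-8x7B-v0.1",
    # 4-bit quantization to reduce GPU memory for the frozen base weights.
    "quantization": {"bits": 4},
    # Parameter-efficient fine-tuning: train a LoRA adapter, not all weights.
    "adapter": {"type": "lora"},
    # Hypothetical prompt template; {instruction} refers to a dataset column.
    "prompt": {
        "template": "### Instruction:\n{instruction}\n\n### Response:\n"
    },
    "input_features": [{"name": "instruction", "type": "text"}],
    "output_features": [{"name": "output", "type": "text"}],
    "trainer": {
        "type": "finetune",
        # Illustrative values only, not tuned for any particular dataset.
        "epochs": 3,
        "batch_size": 1,
        "gradient_accumulation_steps": 16,
        "learning_rate": 0.0001,
    },
}


def train(dataset_path: str):
    """Hypothetical helper: fine-tune with Ludwig's Python API.

    Requires `pip install ludwig` and a GPU with enough memory.
    """
    # Imported lazily so the config above can be inspected without Ludwig.
    from ludwig.api import LudwigModel

    model = LudwigModel(config=config)
    model.train(dataset=dataset_path)
    return model
```

Calling `train("train.csv")` (a hypothetical file path) needs Ludwig installed and a suitable GPU; the `config` dict itself can be inspected or dumped to YAML for the `ludwig` CLI without either.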

Predibase on X: Announcing Ludwig v0.8—the first #opensource low-code framework optimized for building and #finetuning LLMs on your data. 🎉 New features incl. fine-tuning, integrations w/ Deepspeed, parameter efficient fine-tuning (#LoRA), prompt

Fine Tune mistral-7b-instruct on Predibase with Your Own Data and LoRAX, by Rany ElHousieny, Feb, 2024

Travis Addair on LinkedIn: Introducing Predibase: the enterprise declarative machine learning platform

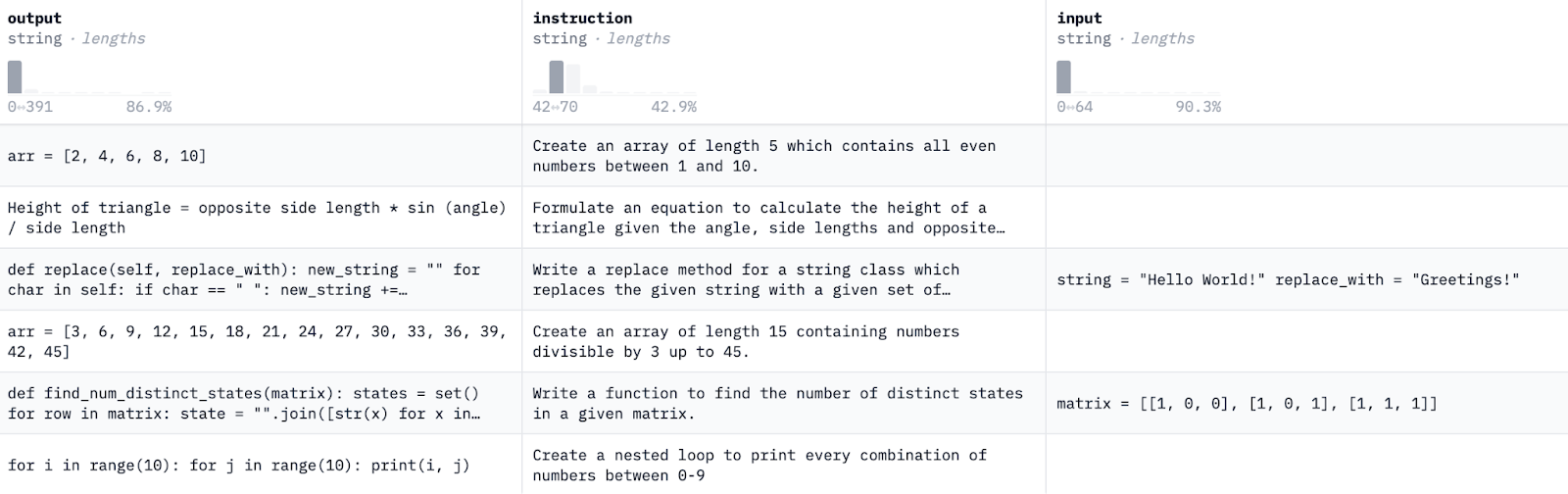

Fine-tuning Example

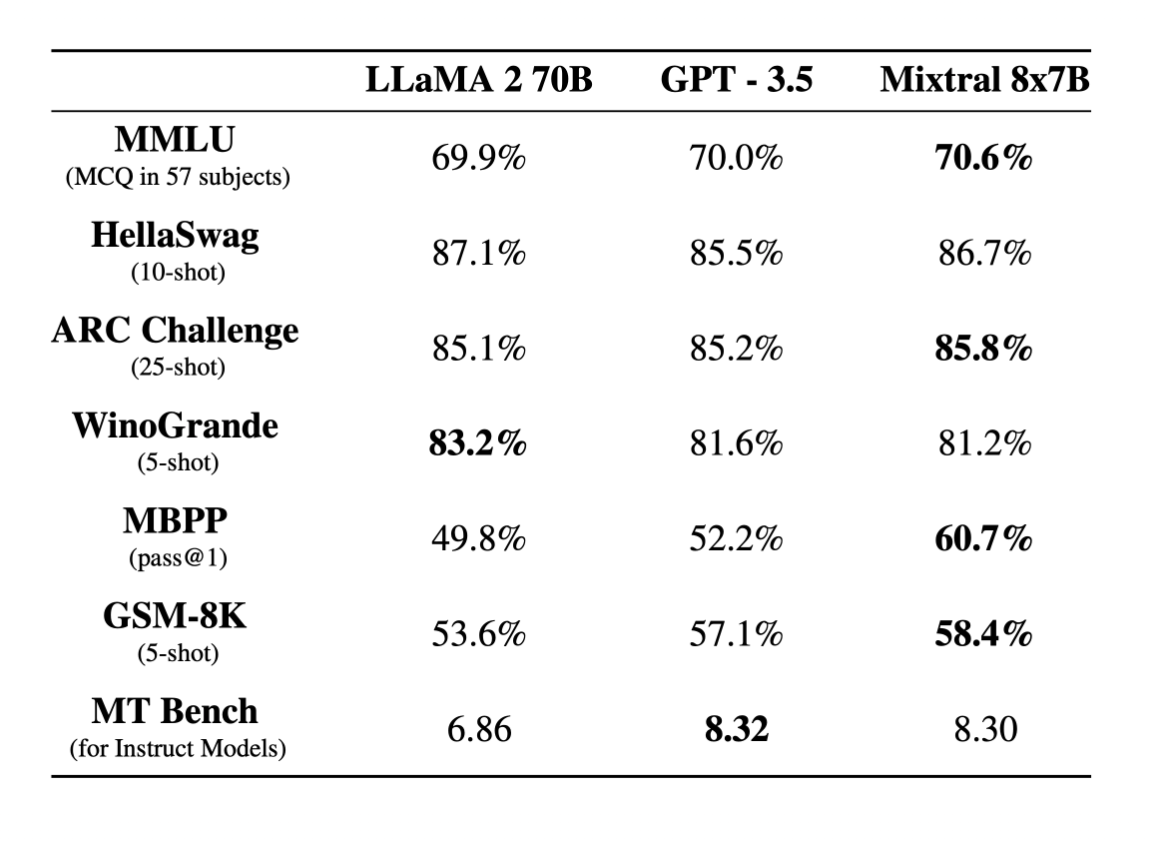

How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase - Predibase

Predibase on LinkedIn: Langchain x Predibase: The easiest way to fine-tune and productionize OSS…

Travis Addair on LinkedIn: Getting the Best Zero-Shot Performance on your Tabular Data with LLMs -…

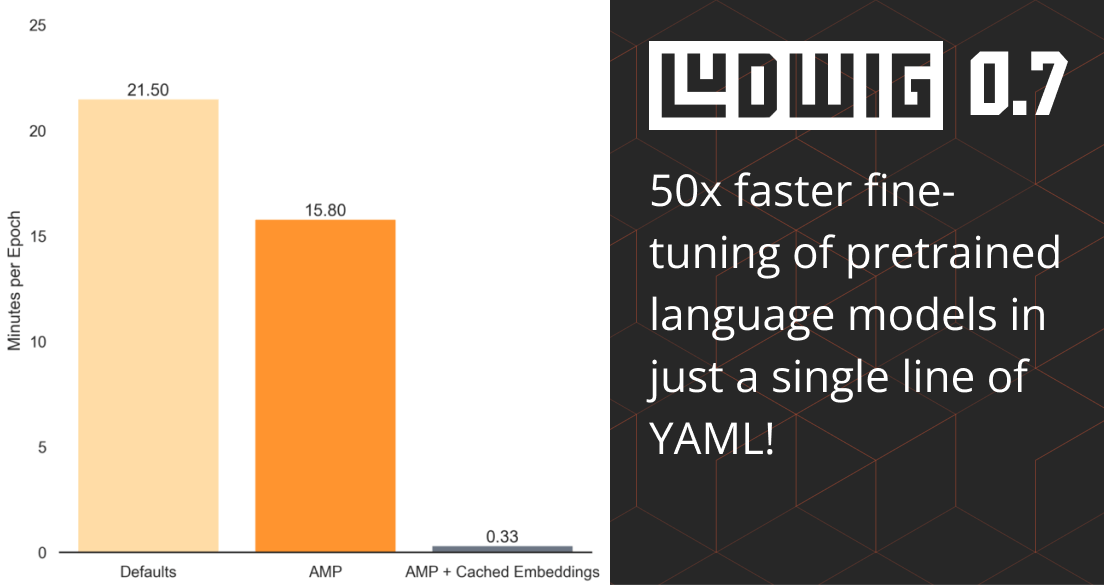

Ludwig v0.7: Fine-tuning Pretrained Image and Text Models 50x Faster and Easier - Predibase - Predibase

Predibase on LinkedIn: Low-Code/No-Code: Why Declarative Approaches are Winning the Future of AI

Little Bear Labs on LinkedIn: LoRAX: The Open Source Framework for Serving 100s of Fine-Tuned LLMs in…

How to Fine-tune Mixtral 8x7b with Open-source Ludwig - Predibase - Predibase

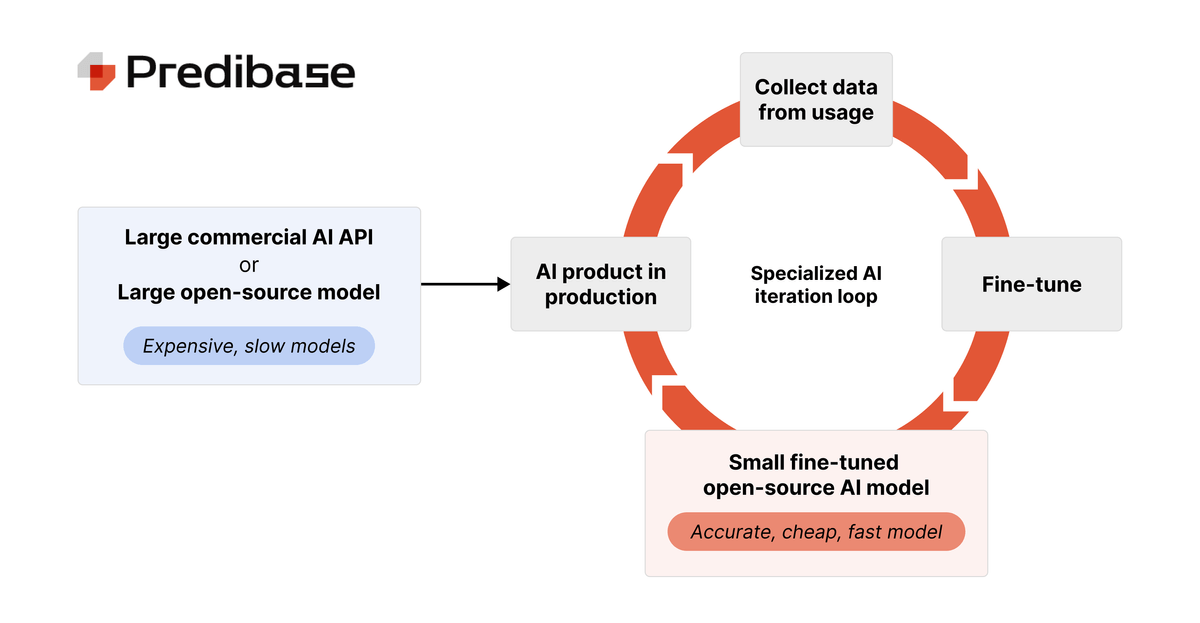

Graduate from OpenAI to Open-Source: 12 best practices for distilling smaller language models from GPT - Predibase - Predibase

Predibase on LinkedIn: Ludwig 0.8 - Build and fine-tune custom LLMs on your private data
