DeepSpeed: Accelerating large-scale model inference and training via system optimizations and compression - Microsoft Research

By A Mystery Man Writer

Last month, the DeepSpeed Team announced ZeRO-Infinity, a step forward in training models with tens of trillions of parameters. In addition to creating optimizations for scale, our team strives to introduce features that also improve speed, cost, and usability. As the DeepSpeed optimization library evolves, we are listening to the growing DeepSpeed community to learn […]

DeepSpeed: Microsoft Research blog - Microsoft Research

N] Improvement on model's inference from DeepSpeed team. [D] How is Jax compared? : r/MachineLearning

Pre-trained models: Past, present and future - ScienceDirect

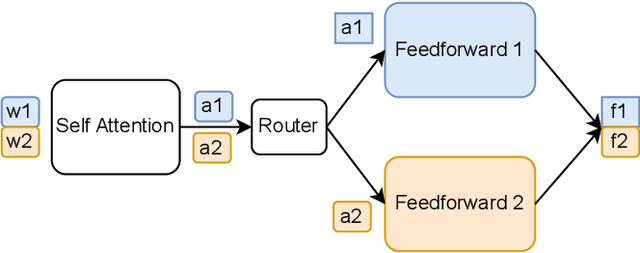

DeepSpeed: Advancing MoE inference and training to power next-generation AI scale - Microsoft Research

LLM(十二):DeepSpeed Inference 在LLM 推理上的优化探究- 知乎

DeepSpeed powers 8x larger MoE model training with high performance - Microsoft Research

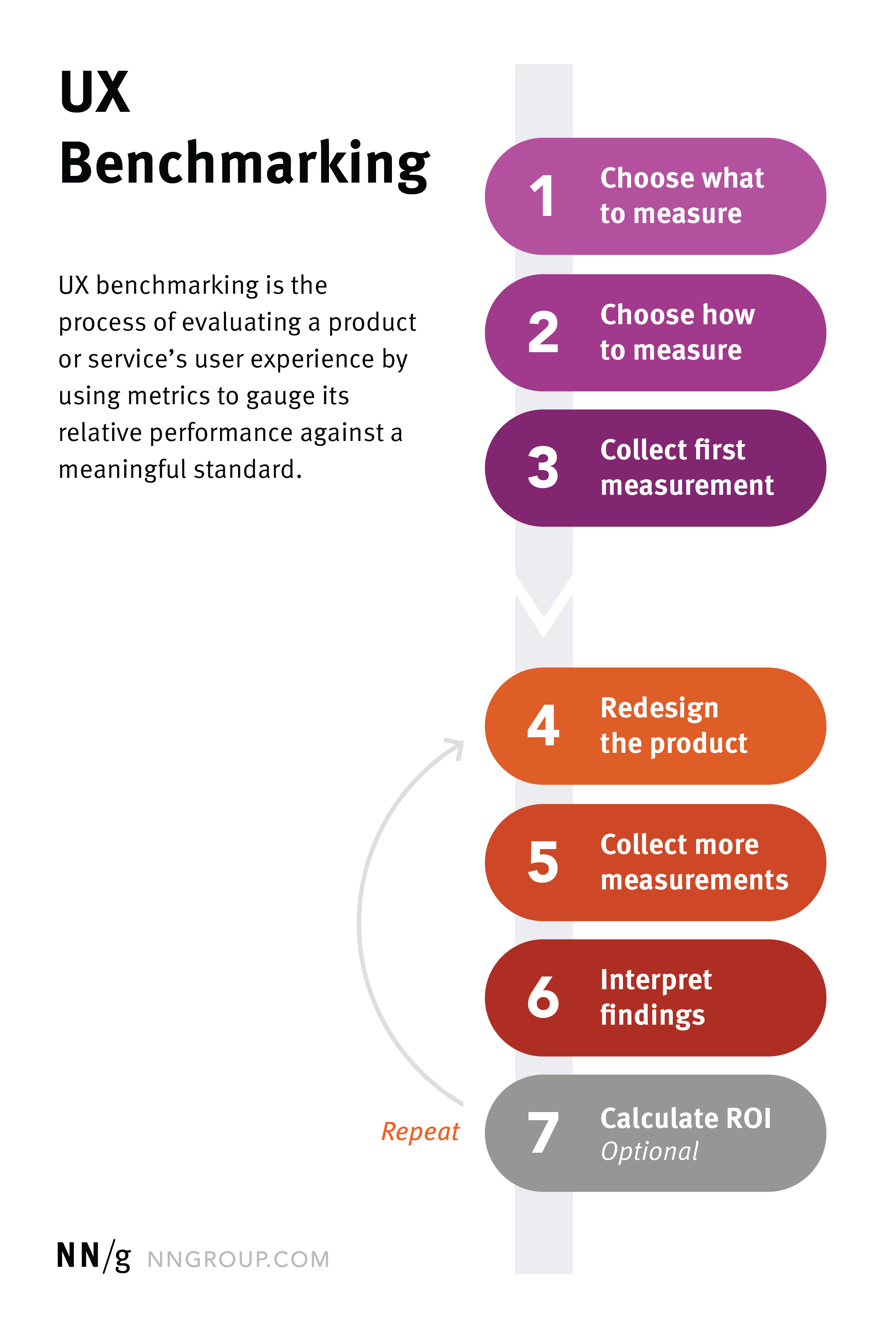

media.nngroup.com/media/editor/2020/07/31/ux-bench

Deploying Large NLP Models: Infrastructure Cost Optimization

Samyam Rajbhandari - CatalyzeX

- Vintage Cabin Creek Red White and Navy Blue Patriotic USA America Windbreaker Suit 1x/x-large 80's 90's

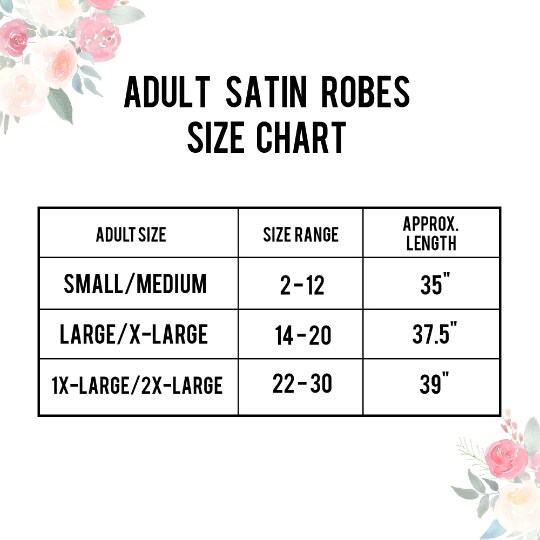

- Rhinestone Bride Satin Robe Getting Ready for Wedding Day, White Bridal Robe for Bridal Shower Gift

- Columbia Men's Last Tracks Jacket, Delta/Black, X-Large

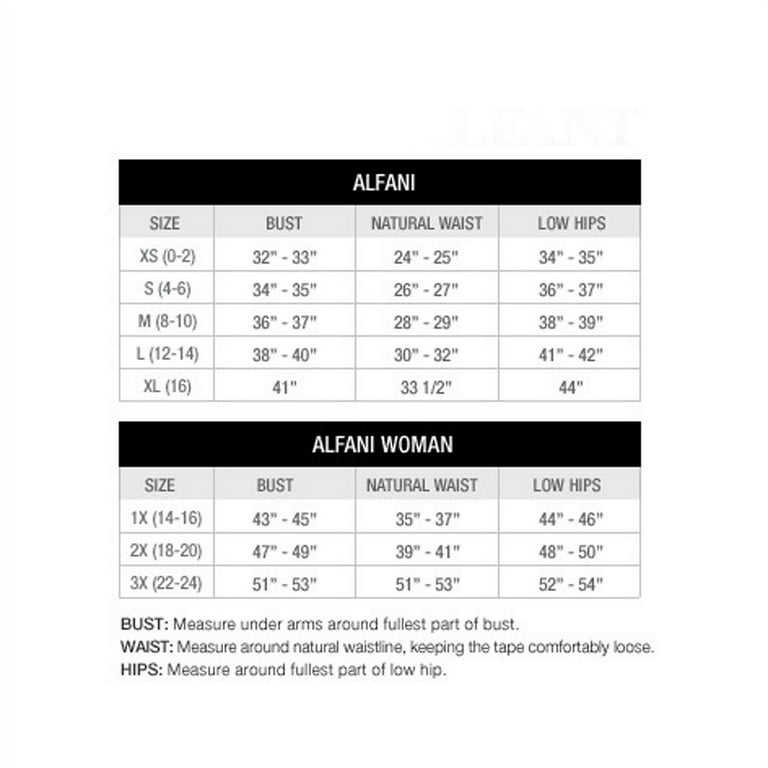

- Alfani Women's Asymmetrical Draped Mesh Top Black Size X-Large

- Dymo LabelWriter Extra Large Shipping Labels 104mm x 159mm

- Lydia Tank – Brandy Melville

- Size Chart

- Buy Selfcare Women's Non Padded White, Red and Black Pure 100% Soft Cotton Full Coverage Everyday Bra with Adjustable Straps (Pack of 3_SN0492-30A) at

- Liebe Lingerie - Top + jeans, é sempre uma pedida certa! Pic linda da @paulacastrobr ✨ . Sutiã Push Up Desert, ref.603130 . #liebelingerie #lingerie #sutiã #outwear

- The Brooks Adrenaline GTS 20 – Supporting runners for over 20 years